Imagine you need to process 1 billion numbers. If you build a list of 1 billion numbers, Python has to create all of them up front and hold them in RAM, which can exhaust your memory and slow the machine to a crawl or crash the program. This is where Python generators come in handy: they produce values on the fly without holding the whole sequence in memory.

A generator is smarter. It doesn’t create the values all at once; it creates them one at a time, only when you ask for them. This is called “lazy evaluation.”

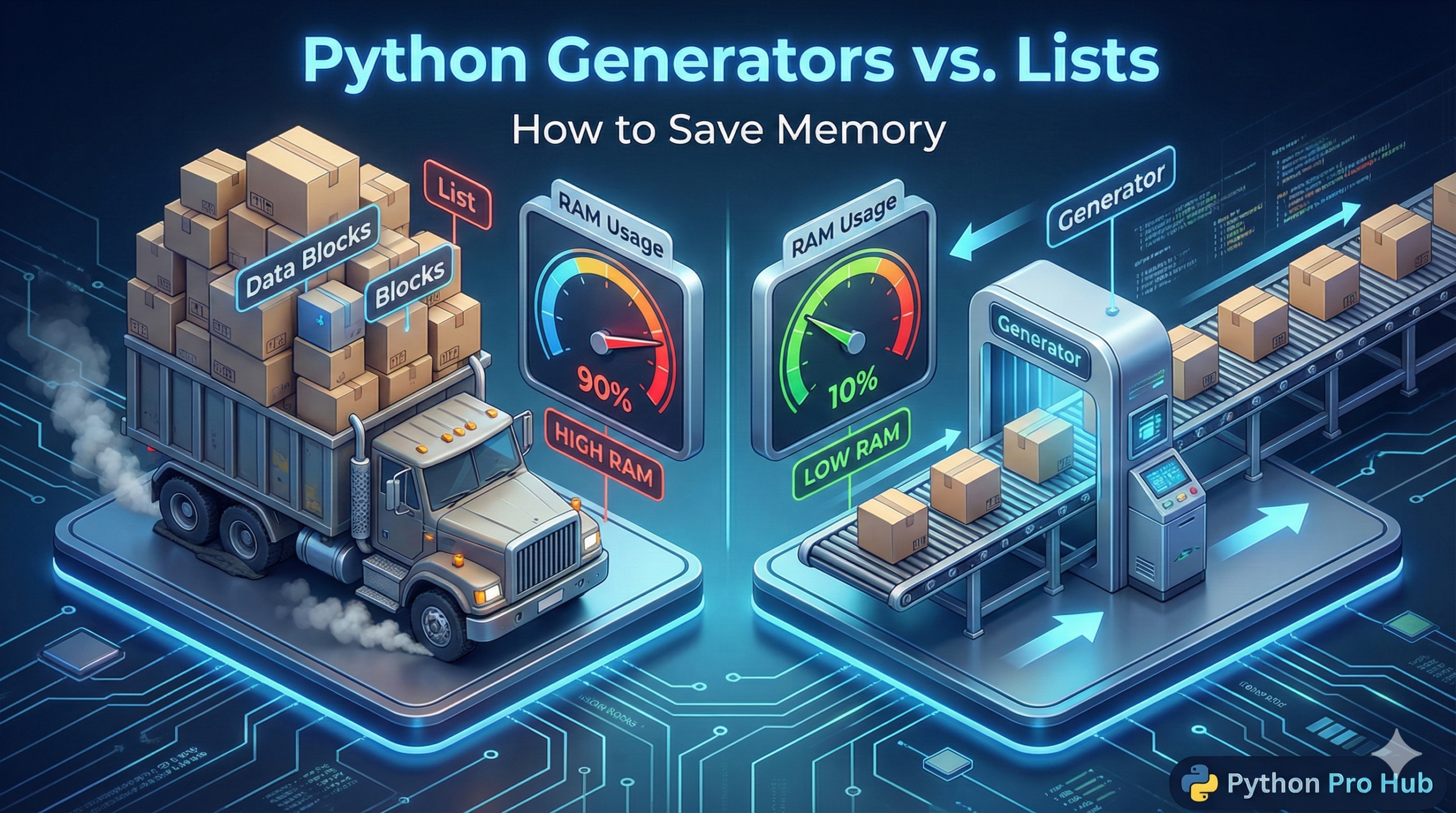

The Visual Difference

- List: [1, 2, 3, 4, 5] (all in memory now)

- Generator: a recipe that knows how to get the next number but hasn’t computed it yet.
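To make the “recipe” idea concrete, here is a minimal sketch. The name `count_up_to` is hypothetical and used only for illustration; the point is that the generator does no work until `next()` asks it for a value.

```python
def count_up_to(limit):
    """Hypothetical generator: yields 1, 2, ..., limit one at a time."""
    number = 1
    while number <= limit:
        yield number  # execution pauses here until the next value is requested
        number += 1


numbers_list = [1, 2, 3, 4, 5]   # all five values exist in memory right now
numbers_gen = count_up_to(5)     # nothing has been computed yet

first = next(numbers_gen)        # runs the body up to the first yield
second = next(numbers_gen)       # resumes after the yield and produces the next value
remaining = list(numbers_gen)    # drains whatever is left

print(first, second, remaining)  # 1 2 [3, 4, 5]
```

Once the generator has been drained it is empty; unlike the list, it cannot be rewound or indexed.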

How to Make a Generator (yield)

Instead of return, a generator function in Python uses yield.

```python
def normal_list_function(n):
    result = []
    for i in range(n):
        result.append(i * i)
    return result


def generator_function(n):
    for i in range(n):
        yield i * i  # Pause here and give back one value
```

The Memory Test

Let’s prove it.

```python
import sys

# A list of 1 million squares
my_list = [i * i for i in range(1000000)]
print(sys.getsizeof(my_list))
# Output: roughly 8 to 9 million bytes (about 8 MB) for the list object alone;
# the exact value varies by Python version

# A generator of 1 million squares (using () instead of [])
my_gen = (i * i for i in range(1000000))
print(sys.getsizeof(my_gen))
# Output: on the order of 100 to 200 bytes
```

The generator is tens of thousands of times smaller because it doesn’t store the data; it just stores the rules to create it.
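One caveat: `sys.getsizeof` counts only the container object, not the integers it refers to, so the list figure understates the real cost. A rough way to compare total allocations is the standard library’s `tracemalloc` module. The sketch below is an illustration under assumptions: the helper name `peak_memory` is hypothetical, `N` is reduced so it runs quickly, and the byte counts will differ between Python versions and machines.

```python
import tracemalloc


def peak_memory(func):
    """Hypothetical helper: return (result, peak bytes allocated while func ran)."""
    tracemalloc.start()
    try:
        result = func()
        _, peak = tracemalloc.get_traced_memory()  # returns (current, peak)
    finally:
        tracemalloc.stop()
    return result, peak


N = 100_000  # smaller than 1 million so the sketch runs quickly

# Same computation twice: once through a full list, once through a generator.
list_total, list_peak = peak_memory(lambda: sum([i * i for i in range(N)]))
gen_total, gen_peak = peak_memory(lambda: sum(i * i for i in range(N)))

print(list_total == gen_total)  # both approaches compute the same sum
print(f"list peak:      {list_peak:,} bytes")
print(f"generator peak: {gen_peak:,} bytes")
```

The list version has to keep every square alive until `sum` finishes, while the generator version only ever holds the current value and the running total.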

When to Use Them

Use generators when:

- You are working with a massive dataset (like reading a huge file line-by-line).

- You don’t need to access items randomly (e.g., you don’t need data[500]); you just need to loop through them once.
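For the large-file case, a generator can hand back one line at a time so that only the current line is held in memory. This is a minimal sketch: `read_lines` is a hypothetical helper, and a small temporary file stands in for the huge file so the example can actually run.

```python
import os
import tempfile


def read_lines(path):
    """Hypothetical helper: yield lines from a text file one at a time."""
    with open(path, encoding="utf-8") as handle:
        for line in handle:  # file objects are themselves lazy iterators
            yield line.rstrip("\n")


# Create a small stand-in file; a real use case would point at a large file.
with tempfile.NamedTemporaryFile(
    "w", suffix=".txt", delete=False, encoding="utf-8"
) as tmp:
    tmp.write("10\n20\n30\n")
    sample_path = tmp.name

# Single pass over the file, one line in memory at a time.
total = sum(int(line) for line in read_lines(sample_path))
print(total)  # 60

os.remove(sample_path)  # clean up the stand-in file
```

Because a generator can only be consumed once, a second pass over the file means calling `read_lines` again rather than reusing the old generator object.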